
On Cumulative Results of Quantum Measurements


Suppose a spin-zero particle decays into two spin-1/2 particles. Conservation of angular momentum requires that if the spins of these two particles are both measured along the same axis, whatever its direction, the particles will necessarily exhibit opposite spins. Thus, the "exhibited spin vectors" point in opposite directions parallel to the measurement axis, and their vector sum is zero. However, suppose we measure the spins of these two particles along different axes. If a particle's spin is "measured" along a certain axis, it will necessarily "exhibit" spin that corresponds to a vector parallel to the axis of measurement, in either the positive or negative sense. So by measuring the spins of the two particles along different axes we can ensure that their exhibited spins are not parallel, and hence they cannot sum to zero. This explains how, beginning with a spin-zero particle, we can end up with two particles that interact with the surroundings by exhibiting spins whose vector sum is not zero. It might be thought that this presents a problem for the conservation of angular momentum. But of course the two particles continue to possess spin following their interactions with the measuring devices, so the "total spin" imparted to the surroundings is not just the vector sum of the spins exhibited in the two initial measurements. Also, a quantum spin "exhibition" doesn't actually impart quantum spin to the surroundings, so the conservation at issue is more subtle.
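To make this concrete, here is a minimal Python sketch (an illustration added here, not taken from the original). It represents each exhibited spin as a unit complex number, with the first axis along 1 and the second axis at angle θ, and lists the magnitudes of the possible vector sums. The function name `possible_sums` is hypothetical.

```python
import cmath
import math

def possible_sums(theta):
    """All four candidate vector sums of two exhibited spins.

    Each exhibited spin is a unit complex number: the first particle
    exhibits +1 or -1 along the real axis, and the second exhibits
    +e^{i*theta} or -e^{i*theta} along an axis rotated by theta.
    """
    axis2 = cmath.exp(1j * theta)
    return [s1 + s2 * axis2 for s1 in (1, -1) for s2 in (1, -1)]

# Print the distinct magnitudes of the possible sums for a few axis separations.
for deg in (0, 60, 90):
    mags = sorted({round(abs(z), 6) for z in possible_sums(math.radians(deg))})
    print(deg, mags)
```

At θ = 0 only the zero sum can actually occur, because conservation forces opposite spins. For 0 < θ < π, however, the two possible magnitudes, 2|sin(θ/2)| and 2|cos(θ/2)|, are both nonzero.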


Nevertheless, we might argue that the vector sum of all future "spin exhibitions" by those two particles must be zero. Admittedly, the empirical content of this assertion is unclear, much like claiming that the total number of heads will equal the total number of tails over all the tosses of a coin. We can never actually evaluate the sums, at least not prior to the "completion" of the universe. To give meaning to the assertion, we would need to specify a more robust limit, such as a bound on how far from zero the total sum could wander in a finite length of time. Still, it's interesting that such limits constrain not only the behavior of the particles themselves but also the circumstances they will encounter, e.g., the measurements to which they are subjected. For example, suppose one of the particles is measured once along a direction x. It would then not be acceptable for all other exhibitions of spin by the two particles to be along an axis perpendicular to x, because then the x component of the sum could never return to zero. Thus a principle of conservation of "exhibited spin vectors" would eventually restrict our "choice" of measurement angles as well as the particles' responses to those angles.


It might be argued that we could accept violations of this postulated conservation law as long as they were within the original quantum uncertainty limits. On this view, that tolerance might be enough to eliminate any effective requirements on the "environment". However, if we simply monitor the exhibited spins of the two particles for a period of time, the net vector sum would essentially perform a random walk, which can wander arbitrarily far from zero in any particular direction. This would then require the environment to give those particles a sufficient number of opportunities to exhibit spin along that direction, so that the sum can ultimately return to (or even close to) zero.
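The unbounded wandering of a random walk can be seen in a toy simulation. This is a minimal sketch under an idealized assumption made only for illustration: every step is a unit vector in a uniformly random direction. Under that assumption the root-mean-square distance after N steps grows like √N, so no fixed bound contains the excursion. The helper names are hypothetical.

```python
import math
import random

def walk_distance(n_steps, rng):
    """Distance from the origin after n_steps unit steps in uniformly random directions."""
    x = y = 0.0
    for _ in range(n_steps):
        phi = rng.uniform(0.0, 2.0 * math.pi)
        x += math.cos(phi)
        y += math.sin(phi)
    return math.hypot(x, y)

def rms_distance(n_steps, trials=500, seed=1):
    """Root-mean-square final distance over many independent walks (seeded for repeatability)."""
    rng = random.Random(seed)
    mean_sq = sum(walk_distance(n_steps, rng) ** 2 for _ in range(trials)) / trials
    return math.sqrt(mean_sq)

# Compare the simulated RMS distance with sqrt(N) for a few step counts.
for n in (10, 100, 1000):
    print(n, round(rms_distance(n), 2), round(math.sqrt(n), 2))
```

The last two columns should roughly agree, since the expected squared distance after N unit steps in independent uniform directions is exactly N.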


In any case, it's interesting that the "net spin vector" resulting from (the initial) measurements of the two spin-1/2 particles discussed above differs depending on whether we assume the pair correlations predicted by quantum mechanics or those predicted by a typical "realistic" model. If we represent the individual exhibited spin vectors by unit complex numbers and assign one of the measurement directions to the number 1, we can express the "net spin vector" for a given angle θ between the two measurement axes as

$$
S(\theta) = \big[1 - C(\theta)\big]\big(1 - e^{i\theta}\big) + C(\theta)\big(1 + e^{i\theta}\big) \tag{1}
$$

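where C(θ) is the correlation between the two measurements with a difference angle of θ. That is, C(θ) is the probability that the two particles exhibit spin in the same sense relative to their respective measurement axes, with the first particle's exhibited spin taken as +1 (the opposite case simply reverses the sign). This is just the weighted average of the two possible outcomes for this value of θ, and it reduces to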

$$
S(\theta) = 1 - \big[1 - 2C(\theta)\big]e^{i\theta}
$$
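The reduction from the weighted average to the closed form can be checked numerically. In this minimal sketch, outcome 1 + e^{iθ} occurs with probability C and outcome 1 − e^{iθ} with probability 1 − C, and the first particle's exhibited spin is assumed to be +1. The function names are hypothetical.

```python
import cmath
import math

def net_spin_weighted(theta, c):
    """Weighted average of the two possible vector sums.

    Assumes the first particle exhibits +1; with probability c the second
    exhibits +e^{i*theta} (same sense), otherwise -e^{i*theta}.
    """
    u = cmath.exp(1j * theta)
    return c * (1 + u) + (1 - c) * (1 - u)

def net_spin_reduced(theta, c):
    """Closed form of the same average: S = 1 - (1 - 2c) e^{i*theta}."""
    return 1 - (1 - 2 * c) * cmath.exp(1j * theta)

theta = math.radians(40)
c_qm = (1 - math.cos(theta)) / 2   # quantum mechanical correlation
c_lin = theta / math.pi            # linear "realistic" correlation
for c in (c_qm, c_lin):
    print(net_spin_weighted(theta, c), net_spin_reduced(theta, c))
```

The two printed values in each row should agree to rounding error.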

Our two candidates for the correlation function C(θ) are

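$$
C_{\text{QM}}(\theta) = \frac{1 - \cos\theta}{2} = \sin^2\!\left(\frac{\theta}{2}\right), \qquad C_{\text{R}}(\theta) = \frac{|\theta|}{\pi} \quad (-\pi \le \theta \le \pi)
$$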

Substituting these into the preceding equation for S(θ) gives the expressions for the net spin vector resulting from combined spin measurements on two coupled particles:

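$$
S_{\text{QM}}(\theta) = 1 - \cos\theta\, e^{i\theta}, \qquad S_{\text{R}}(\theta) = 1 - \left(1 - \frac{2|\theta|}{\pi}\right)e^{i\theta}
$$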

Splitting these expressions into their real and imaginary parts, we have

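$$
S_{\text{QM}}(\theta) = \frac{1 - \cos 2\theta}{2} - i\,\frac{\sin 2\theta}{2}
$$

$$
S_{\text{R}}(\theta) = \left[1 - \left(1 - \frac{2|\theta|}{\pi}\right)\cos\theta\right] - i\left(1 - \frac{2|\theta|}{\pi}\right)\sin\theta
$$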

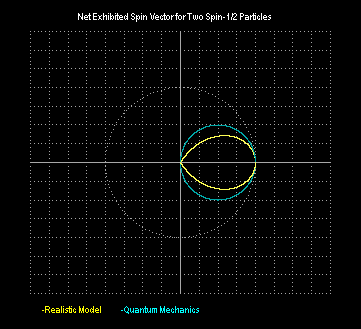

We could then multiply each of these by exp(iϕ), where ϕ is the angle of our first measurement axis (which we arbitrarily assigned to the number 1). But this merely rotates the entire distribution, reflecting the circular symmetry of the overall outcome, so we'll focus on the relative distribution of net spin vectors. The results are illustrated below.


The quantum mechanical distribution is a perfect circle of radius 1/2 centered on the point (1/2,0), since with this correlation S(θ) = (1 − e^{2iθ})/2. The simple realistic model, by contrast, gives a "teardrop" shaped distribution. The density of the quantum mechanical distribution is uniform as a function of θ, with a 2:1 counter-rotating relationship, as illustrated below.

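The circle and teardrop shapes can be checked numerically. This minimal sketch evaluates both net spin vectors on a grid of θ values in [0, π] and measures each point's distance from (1/2, 0). It assumes the convention that the first particle exhibits +1, so S(θ) = 1 − (1 − 2C(θ))e^{iθ}. The names `s_qm` and `s_lin` are hypothetical.

```python
import cmath
import math

def s_qm(theta):
    """Net spin vector with C(theta) = (1 - cos theta)/2."""
    return 1 - math.cos(theta) * cmath.exp(1j * theta)

def s_lin(theta):
    """Net spin vector with the linear correlation C(theta) = |theta|/pi."""
    return 1 - (1 - 2 * abs(theta) / math.pi) * cmath.exp(1j * theta)

thetas = [math.pi * k / 360 for k in range(361)]
qm_radii = [abs(s_qm(t) - 0.5) for t in thetas]
lin_radii = [abs(s_lin(t) - 0.5) for t in thetas]
print(min(qm_radii), max(qm_radii))    # both 0.5 up to rounding: a circle
print(min(lin_radii), max(lin_radii))  # spread out: not a circle about (1/2, 0)
```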

The density of the simple realistic model also involves counter-rotating directions with an overall 2:1 ratio, but it is non-uniform over the distribution. Taking these two distributions as the discrete "steps" of two random walks, we can compare the long-term "positions" of the walks, and the difference between those positions, for the same sequence of random measurement angles. It would be interesting to know whether these two distributions of "steps" lead to significantly different random walk characteristics. I believe the mean step size is larger for the simple realistic model, because its distribution is weighted more heavily toward the outer rim. However, the few numerical trials I've done haven't shown any noticeably greater tendency for the realistic model to diverge.
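A numerical trial of this kind might be set up as in the following sketch. The modeling choices are assumptions for illustration, not the author's actual procedure:

- At each step, the relative angle θ is drawn uniformly from [0, π].
- The orientation ϕ of the first axis is drawn uniformly from [0, 2π).
- The step is the net spin vector S(θ) rotated by e^{iϕ}.
- Both walks share the same (θ, ϕ) sequence.

The function name `compare_walks` is hypothetical.

```python
import cmath
import math
import random

def s_qm(theta):
    """Quantum mechanical step: C(theta) = (1 - cos theta)/2."""
    return 1 - math.cos(theta) * cmath.exp(1j * theta)

def s_lin(theta):
    """Linear "realistic" step: C(theta) = theta/pi for 0 <= theta <= pi."""
    return 1 - (1 - 2 * theta / math.pi) * cmath.exp(1j * theta)

def compare_walks(n_steps, seed=0):
    """Run both walks on one shared random sequence of (theta, phi) values.

    Returns the final distance from the origin of the quantum-mechanical
    walk, of the linear-model walk, and the distance between the endpoints.
    """
    rng = random.Random(seed)
    pos_qm = pos_lin = 0j
    for _ in range(n_steps):
        theta = rng.uniform(0.0, math.pi)      # relative angle (assumed uniform)
        phi = rng.uniform(0.0, 2.0 * math.pi)  # first-axis orientation (assumed uniform)
        rot = cmath.exp(1j * phi)
        pos_qm += s_qm(theta) * rot
        pos_lin += s_lin(theta) * rot
    return abs(pos_qm), abs(pos_lin), abs(pos_qm - pos_lin)

print(compare_walks(10_000, seed=42))
```

Individual runs are noisy, so a meaningful comparison would average these distances over many seeds and step counts.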


As noted above, the net exhibited spin resulting from measurements of two spin-1/2 particles taken along directions that differ by the angle θ is given by (1), where C(θ) is the correlation (0 to 1) between the measurements. With the quantum mechanical correlation function, the magnitude of S(θ) is simply |sin θ|, so its average over θ is exactly 2/π = 0.636619... times the unit spin vector. The linear "realistic" correlation, by contrast, gives an average net spin of 0.65733... spin units, which is about 3% greater. Therefore, the rate of divergence of net exhibited spin (based on a random walk with these step sizes) is smaller for the quantum mechanical correlation than for the linear "realistic" correlation. This raises an interesting question: of all monotonic functions C(θ) passing through the points (0,0), (π/2,1/2) and (π,1), which one minimizes the exhibited spin divergence?
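Both averages can be reproduced by direct numerical integration. This minimal sketch applies the midpoint rule to (1/π)∫₀^π |S(θ)| dθ with S(θ) = 1 − (1 − 2C(θ))e^{iθ}, first for the quantum mechanical correlation and then for the linear one. The helper name `mean_magnitude` is hypothetical.

```python
import cmath
import math

def mean_magnitude(corr, n=200_000):
    """Midpoint-rule estimate of (1/pi) * integral over [0, pi] of |S(theta)|,
    where S(theta) = 1 - (1 - 2*corr(theta)) * e^{i*theta}."""
    h = math.pi / n
    total = 0.0
    for k in range(n):
        theta = (k + 0.5) * h
        total += abs(1 - (1 - 2 * corr(theta)) * cmath.exp(1j * theta))
    return total * h / math.pi

qm = mean_magnitude(lambda t: (1 - math.cos(t)) / 2)  # quantum mechanical C
lin = mean_magnitude(lambda t: t / math.pi)           # linear C on [0, pi]
print(qm, 2 / math.pi)   # both about 0.6366
print(lin, lin / qm)     # about 0.6573, ratio about 1.03
```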


Since the correlation depends only on the absolute value of θ, C is an "even" function, i.e., C(θ) = C(−θ). The magnitude of the complex number S(θ) is given by

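$$
M(\theta) = \left|S(\theta)\right| = \sqrt{1 - 2\big[1 - 2C(\theta)\big]\cos\theta + \big[1 - 2C(\theta)\big]^2}
$$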
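One algebraic step may be worth making explicit; it is an added derivation, not part of the original text. Write S(θ) = 1 − (1 − 2C)e^{iθ}. The squared magnitude |S(θ)|² = 1 − 2(1 − 2C)cos θ + (1 − 2C)² can then be regrouped by completing the square in C:

$$
1 - 2\big[1 - 2C\big]\cos\theta + \big[1 - 2C\big]^2 = \sin^2\theta + \big[2C - 1 + \cos\theta\big]^2
$$

The first term on the right does not depend on C. So |S(θ)| ≥ |sin θ| at every θ, with equality exactly when 2C(θ) = 1 − cos θ. This is the same condition that the calculus-of-variations argument arrives at.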

In summary, then, we want the function C(θ) such that

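$$
C(0) = 0, \qquad C\!\left(\tfrac{\pi}{2}\right) = \tfrac{1}{2}, \qquad C(\pi) = 1, \qquad C(-\theta) = C(\theta)
$$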

and such that the mean of M(θ), i.e., the integral

$$
\bar{M} = \frac{1}{\pi}\int_{0}^{\pi} M(\theta)\, d\theta
$$

is minimized. If we denote the integrand by F(θ,C,C′), we can determine the function C(θ) that makes this integral stationary by the calculus of variations. Euler's equation gives

$$
\frac{\partial F}{\partial C} - \frac{d}{d\theta}\left(\frac{\partial F}{\partial C'}\right) = 0
$$

Since F does not depend on C′, we have ∂F/∂C′ = 0, and Euler's equation reduces to

$$
\frac{\partial F}{\partial C} = \frac{2\big[2C(\theta) - 1 + \cos\theta\big]}{M(\theta)} = 0
$$

Therefore, the unique correlation function for spin-1/2 particles that minimizes the divergence of exhibited spin is the one that makes the numerator vanish, which implies

$$
C(\theta) = \frac{1 - \cos\theta}{2}
$$

This is the quantum mechanical prediction for the correlation. Thus the correlation between spin measurements of two spin-1/2 particles, as a function of the relative angle of measurement, can be derived from a variational principle. Specifically, the correlation function predicted by quantum mechanics can be shown to minimize the mean step size of the cumulative net spin vector exhibited by the particles over all possible relative angles of measurement.
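Because F does not depend on C′, the minimization can be carried out separately at each θ. The brute-force sketch below uses a grid search over C ∈ [0, 1] at fixed θ, a numerical stand-in for the calculus of variations rather than the method used above, and compares the minimizer with (1 − cos θ)/2. The helper names are hypothetical.

```python
import cmath
import math

def magnitude(theta, c):
    """|S(theta)| for correlation value c, with S = 1 - (1 - 2c) e^{i*theta}."""
    return abs(1 - (1 - 2 * c) * cmath.exp(1j * theta))

def best_correlation(theta, grid=2001):
    """Grid search for the c in [0, 1] that minimizes |S(theta)| at fixed theta."""
    candidates = [k / (grid - 1) for k in range(grid)]
    return min(candidates, key=lambda c: magnitude(theta, c))

# Compare the grid-search minimizer with the quantum mechanical correlation.
for deg in (30, 60, 90, 120, 150):
    theta = math.radians(deg)
    print(deg, best_correlation(theta), (1 - math.cos(theta)) / 2)
```

The pointwise minimizer (1 − cos θ)/2 automatically satisfies C(0) = 0, C(π/2) = 1/2 and C(π) = 1. So imposing those conditions does not change the answer.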


More generally, it may be that this principle (minimizing the net unconserved quantities over the range of possible interactions) underlies all quantum mechanical processes. Adapting Kant's term, we might describe this principle as the "categorical imperative". That is, each individual particle's exhibited behavior is governed by the rule that would have to be followed by all such particles in equivalent circumstances in order to minimize the overall divergence in the evolution of the net "exhibited" quantities.


To see how this enters into the general quantum process, recall that in every measurement there is a degree of uncertainty depending on the precise manner in which the measurement is taken, i.e., the "basis" onto which we project the state vector to give the probabilities of the various possible discrete outcomes. The issue of conservation arises when we consider the interaction of two or more subsystems. The crucial point is that we're free to select the bases for our measurements of these various subsystems independently, and therefore the bases are not, in general, parallel. As a result, the exhibited behaviors of the subsystems will not, in general, be equal and opposite, and so each set of measurements represents a step in a random walk around the point of strict conservation.


We described this in detail for the case of quantum spin. There it was shown that the requirement to minimize the divergence from strict conservation of "exhibited spin" leads directly to the quantum mechanical prediction for the correlation of spin measurements of coupled particles. Can this same argument be used to derive the quantum mechanical predictions for all quantum processes? If so, that would be interesting, because it would single out, more or less from first principles, the pattern of behavior that yields the least divergent evolution of net exhibited quantities for any prescribed set of measurements. This hypothetical pattern of behavior would be identifiable and significant as a limiting case even if we knew nothing about quantum mechanics. Also, the fact that this evolution ultimately implies constraints on the supposedly free choices of measurement bases (as discussed above) is intriguing. In addition, the unavoidable evolution of net exhibited quantities, even in the context of a "conservative process", is suggestive of a fundamental temporal asymmetry.


We've dealt explicitly with only a very simple special case, namely an idealized two-particle EPRB experiment. This result is consistent with, but certainly doesn't prove, the more sweeping hypothesis that quantum mechanics in general predicts joint probabilities for the results of measurements such that the net overall divergence of our classical "conserved quantities" is minimized when evaluated over all possible measurements. On the other hand, this hypothesis has a plausibility from general considerations that extend beyond the simple EPRB context. In a way it can be regarded as an extension (and quantification) of the traditional "correspondence principle", although it shifts the focus from the density of the wave function to the distribution of the actual results of measurements and interactions. (This shift has some inherent advantages, considering the dubious ontological status of the wave function.)


We also recognize that the density matrix in quantum mechanics is not conserved, but our hypothesis doesn't identify the "quantity" with a density matrix. It begins with a classical "conserved" quantity, like angular momentum, which on the level of individual quantum interactions is not strictly conserved (in the sense that different parts of an "entangled" system may interact with "non-parallel" measurements, so the net measured quantities don't fully cancel). Quantum mechanics predicts a certain distribution for the results of measurements (over identically prepared systems) for whatever measurements we might choose to make. Thus, quantum mechanics gives predictions over the entire "space" of possible interactions that the system could encounter.


The hypothesis (confirmed in the case of simple EPRB situations) is as follows. Suppose we evaluate the expected net "residual" unbalanced sum of the predicted results of measurements of a particular quantity (e.g., momentum), uniformly over the space of possible interactions. Then the quantum mechanical predictions, among all possible joint distributions, yield the minimum possible net imbalance.


Admittedly there are a number of ambiguities in this statement. For example, it's easy to say what "uniformly" means for the space of possible measurements in a simple EPRB experiment, where all measurements are simple angles about a common axis. In more complicated situations, however, it may be less clear how to define "equi-probable measurements". Also, one can imagine counter-examples to the claim of minimization based on degenerate laws (e.g., every measurement of every kind always gives a null result). So in order to claim the quantum mechanical relations are variational minima, we would need to stipulate some non-degenerate structure.


On a trivial level, one could challenge even the simple EPRB result by saying that if nature really wanted to minimize the net unbalanced spin, it would simply have created all particles without spin, so they'd glide through a Stern-Gerlach apparatus undisturbed. On the other hand, this falls into the category of "who ordered that?", i.e., quantum mechanics itself doesn't predict all the structure on which it operates, including the masses of particles, etc. True, there are differing opinions on whether (relativistic) quantum mechanics "predicts" spin-1/2 particles, but it certainly doesn't predict the entire standard model. It goes without saying that our theories rely on quite a bit of seemingly arbitrary structure.


It might be argued that this approach can give, at best, only a partial account of the state of an entangled system, but this isn't obvious to me. The constraint of minimizing the divergence of conserved quantities over the entire space of possible measurements/interactions is actually quite strong. Remember that this is the space of all possible joint measurements. Admittedly it might be exceedingly difficult to evaluate in complicated situations, but it could well be a sufficient constraint to fully define the system.


It is sometimes said that the existence of entanglement in quantum mechanics is "explained" by the fact that a function f(x,y) of two variables need not be the product g(x)h(y) of two functions of the individual variables separately. One could debate whether this really constitutes an explanation for why entanglement exists, or merely a description or characterization of entanglement. I see it more as the latter, but then I'm not a good judge of explanations for "why X exists" for any X. Suppose it could be shown that the laws of quantum mechanics can be derived in the way I've suggested (with some suitable definition of uniformity over the space of all possible measurements, etc.). Even then, I'd be reluctant to claim that this explains why entanglement exists. On the other hand, since it implies that entanglement serves to minimize certain functions related to classical conserved quantities, it could be seen as having some kind of explanatory value.
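For two-outcome variables, the product condition f(x,y) = g(x)h(y) has a concrete test: a nonzero 2×2 table of values factors in this way exactly when its determinant is zero. The minimal illustration below uses the standard singlet amplitudes (|↑↓⟩ − |↓↑⟩)/√2, which are not given in the original text. The function name and the example vectors g and h are hypothetical.

```python
import math

def is_product_2x2(f):
    """A nonzero 2x2 table f[x][y] factors as g(x)*h(y) iff its determinant is zero."""
    return math.isclose(f[0][0] * f[1][1] - f[0][1] * f[1][0], 0.0, abs_tol=1e-12)

r = 1 / math.sqrt(2)
# Rows: first particle (up, down); columns: second particle (up, down).
singlet = [[0.0, r], [-r, 0.0]]
# Built from g = (0.6, 0.8) and h = (0.8, 0.6), so it factors by construction.
product = [[0.6 * 0.8, 0.6 * 0.6],
           [0.8 * 0.8, 0.8 * 0.6]]
print(is_product_2x2(singlet))  # False: entangled
print(is_product_2x2(product))  # True: factorizable
```

For larger tables the analogous test is that the matrix has rank 1.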
