Differences Between Normal Samples

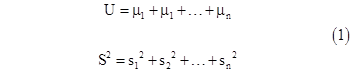

We often need to know the probability that the range of n samples drawn from a given normally distributed population will exceed a certain value. A special case of this is n = 2, which amounts to finding the distribution of the difference between samples from two normally distributed populations. It's well known that the sum (or difference, by simply negating one or more of the variables) of n independent normally distributed random variables with means µ1, µ2, …, µn and standard deviations σ1, σ2, …, σn is also a normally distributed random variable, with mean and standard deviation given by

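$$
\mu = \mu_1 + \mu_2 + \cdots + \mu_n, \qquad \sigma = \sqrt{\sigma_1^2 + \sigma_2^2 + \cdots + \sigma_n^2} \tag{1}
$$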
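The additivity rule above can be sketched in a few lines of Python, assuming the variables are independent. The helper name `combine_normals` is hypothetical. The cross-check uses the standard library's `statistics.NormalDist`, whose `+` and `-` operators combine independent normal variables in the same way.

```python
from math import sqrt
from statistics import NormalDist

def combine_normals(mus, sigmas, signs=None):
    """Mean and standard deviation of sum(signs[i] * X_i), where the X_i are
    independent normal variables with means mus[i] and std devs sigmas[i]."""
    if signs is None:
        signs = [1] * len(mus)
    # Negating a variable flips the sign of its mean...
    mu = sum(s * m for s, m in zip(signs, mus))
    # ...but leaves its variance unchanged, so the variances always add.
    sigma = sqrt(sum(sd * sd for sd in sigmas))
    return mu, sigma

# Signed difference of two standard normals: mean 0, std dev sqrt(2).
mu, sigma = combine_normals([0.0, 0.0], [1.0, 1.0], signs=[1, -1])
print(mu, sigma)

# Cross-check with the standard library, which applies the same rule.
diff = NormalDist(0.0, 1.0) - NormalDist(0.0, 1.0)
print(diff.mean, diff.stdev)
```

Note that the `signs` argument only affects the mean. This is why a difference of normal variables is always at least as spread out as either one alone.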

Hence the (signed) difference between two independent standard normal random variables is normally distributed with a mean of zero and a standard deviation of √2. In other words, the density of the difference is

$$
\frac{1}{\sqrt{2}\,\sqrt{2\pi}}\, e^{-x^2/(2\cdot 2)} = \frac{1}{2\sqrt{\pi}}\, e^{-x^2/4}
$$

Of course, the unsigned difference has twice this density, restricted to the range x > 0.
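Both densities can be written down directly. This Python sketch uses hypothetical function names. It cross-checks the signed density against `statistics.NormalDist(0, sqrt(2)).pdf`. It also confirms numerically that the unsigned density, supported on x ≥ 0 only, integrates to 1.

```python
from math import exp, pi, sqrt
from statistics import NormalDist

def diff_density(x):
    """Density of the signed difference t1 - t2 of two independent
    standard normal samples: normal with mean 0 and variance 2."""
    return exp(-x * x / 4) / (2 * sqrt(pi))

def abs_diff_density(x):
    """Density of |t1 - t2|: twice the signed density on x >= 0, zero below."""
    return 2.0 * diff_density(x) if x >= 0 else 0.0

reference = NormalDist(0.0, sqrt(2))
for x in (0.0, 0.5, 1.0, 2.5):
    print(x, diff_density(x), reference.pdf(x))

# Total probability of the unsigned density, by the trapezoidal rule on [0, 20]
# (the density is negligible beyond 20, so that cutoff stands in for infinity).
h = 0.001
total = h * (0.5 * abs_diff_density(0.0)
             + sum(abs_diff_density(i * h) for i in range(1, 20000)))
print(total)
```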

The additivity of normal distributions according to equations (1) is so familiar that we often assume it's self-evident, but it's interesting to review how this additivity (which is closely related to the central limit theorem and the special properties of the normal distribution) is actually proven. To illustrate, let's take the simple case of finding the distribution of the difference between two independent standard normal random variables. Letting f(t) denote the standard normal density function, the probability that two random samples t1 and t2 will differ by more than u can be expressed as

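$$
P\{|t_1 - t_2| > u\} = \int_{-\infty}^{\infty} f(t_1)\left[\int_{-\infty}^{t_1 - u} f(t_2)\,dt_2 + \int_{t_1 + u}^{\infty} f(t_2)\,dt_2\right] dt_1
$$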
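Before evaluating the integral, it helps to have an independent reference value. This Monte Carlo sketch uses a hypothetical helper name and a fixed seed so the results are reproducible. It simply samples pairs t1, t2 from the standard normal distribution and counts how often they differ by more than u. Any exact evaluation of this probability should agree with its estimates to within sampling error.

```python
import random

def prob_diff_exceeds_mc(u, trials=100_000, seed=1):
    """Monte Carlo estimate of P(|t1 - t2| > u) for independent
    standard normal samples t1 and t2."""
    rng = random.Random(seed)  # private generator so results are reproducible
    hits = 0
    for _ in range(trials):
        t1 = rng.gauss(0.0, 1.0)
        t2 = rng.gauss(0.0, 1.0)
        if abs(t1 - t2) > u:
            hits += 1
    return hits / trials

for u in (0.5, 1.0, 2.0):
    print(u, prob_diff_exceeds_mc(u))
```

With 100,000 trials the standard error of each estimate is at most about 0.0016, so only the first two decimal places should be trusted.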

Now, if we let F(x) denote the standard normal distribution function, given by integrating the normal density function

$$
F(x) = \int_{-\infty}^{x} f(t)\,dt = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{x} e^{-t^2/2}\,dt
$$
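In code, F is usually computed from the error function rather than by integrating f directly, using the identity F(x) = ½[1 + erf(x/√2)]. The names f and F below follow the text; the quadrature helper is hypothetical. The sketch checks the identity against a direct trapezoidal integration of f. That integration starts from a left cutoff of −12, an assumption standing in for −∞.

```python
from math import erf, exp, pi, sqrt

def f(t):
    """Standard normal density."""
    return exp(-t * t / 2) / sqrt(2 * pi)

def F(x):
    """Standard normal distribution function, via the error function."""
    return 0.5 * (1.0 + erf(x / sqrt(2)))

def F_by_quadrature(x, lower=-12.0, panels=20_000):
    """Trapezoidal-rule integral of f from `lower` (standing in for
    -infinity) up to x."""
    h = (x - lower) / panels
    total = 0.5 * (f(lower) + f(x))
    for i in range(1, panels):
        total += f(lower + i * h)
    return total * h

for x in (-1.0, 0.0, 1.0, 1.96):
    print(x, F(x), F_by_quadrature(x))
```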

and if we note that, by symmetry, the two terms inside the square brackets of the prior expression make equal contributions to the integral, we have

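$$
P\{|t_1 - t_2| > u\} = \int_{-\infty}^{\infty} f(t)\left[F(t-u) + 1 - F(t+u)\right] dt = 2\int_{-\infty}^{\infty} f(t)\left[1 - F(t+u)\right] dt \tag{2}
$$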
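The symmetry step can be checked numerically. This sketch uses hypothetical helper names, with Python's `math.erf` supplying F. It evaluates two integrals by the trapezoidal rule:

- the bracketed form ∫ f(t)[F(t − u) + 1 − F(t + u)] dt;
- the merged form 2∫ f(t)[1 − F(t + u)] dt.

The infinite range is truncated to [−10, 10], which is an assumption justified by f being negligibly small outside it.

```python
from math import erf, exp, pi, sqrt

def f(t):
    """Standard normal density."""
    return exp(-t * t / 2) / sqrt(2 * pi)

def F(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2)))

def trapezoid(g, a, b, n=4000):
    """Composite trapezoidal rule for g on [a, b] with n panels."""
    h = (b - a) / n
    total = 0.5 * (g(a) + g(b))
    for i in range(1, n):
        total += g(a + i * h)
    return total * h

def prob_bracket_form(u, lim=10.0):
    # Both tail terms kept separately inside the brackets.
    return trapezoid(lambda t: f(t) * (F(t - u) + 1.0 - F(t + u)), -lim, lim)

def prob_symmetric_form(u, lim=10.0):
    # The two equal contributions merged: 2 f(t) [1 - F(t + u)].
    return trapezoid(lambda t: 2.0 * f(t) * (1.0 - F(t + u)), -lim, lim)

for u in (0.5, 1.0, 2.0):
    print(u, prob_bracket_form(u), prob_symmetric_form(u))
```

The trapezoidal rule is a deliberate choice here. For smooth integrands that decay rapidly at both ends, it converges much faster than its textbook error bound suggests.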

Writing x in place of u and differentiating 1 minus this function with respect to x gives the density distribution

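$$
g(x) = \frac{d}{dx}\left(1 - 2\int_{-\infty}^{\infty} f(t)\left[1 - F(t+x)\right] dt\right) = 2\int_{-\infty}^{\infty} f(t)\, f(t+x)\,dt
$$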
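Here is a numerical sketch of this differentiation step. The quadrature helper, the truncation to [−10, 10], and the central-difference step size h are assumptions, not part of the derivation. The code compares the integral 2∫ f(t) f(t + x) dt with a finite-difference derivative of 1 − P{|t1 − t2| > x}.

```python
from math import erf, exp, pi, sqrt

def f(t):
    """Standard normal density."""
    return exp(-t * t / 2) / sqrt(2 * pi)

def F(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2)))

def trapezoid(g, a, b, n=4000):
    """Composite trapezoidal rule for g on [a, b] with n panels."""
    h = (b - a) / n
    total = 0.5 * (g(a) + g(b))
    for i in range(1, n):
        total += g(a + i * h)
    return total * h

def prob_exceeds(x, lim=10.0):
    """P(|t1 - t2| > x) = 2 * integral of f(t) [1 - F(t + x)] dt."""
    return trapezoid(lambda t: 2.0 * f(t) * (1.0 - F(t + x)), -lim, lim)

def density_integral(x, lim=10.0):
    """Unsigned density as 2 * integral of f(t) f(t + x) dt."""
    return trapezoid(lambda t: 2.0 * f(t) * f(t + x), -lim, lim)

def density_finite_difference(x, h=1e-4):
    """Derivative of 1 - P(|t1 - t2| > x), by a central difference."""
    return -(prob_exceeds(x + h) - prob_exceeds(x - h)) / (2 * h)

for x in (0.25, 1.0, 2.0):
    print(x, density_integral(x), density_finite_difference(x))
```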

which can be evaluated explicitly to give the unsigned density distribution

$$
g(x) = \frac{1}{\sqrt{\pi}}\, e^{-x^2/4}, \qquad x \ge 0
$$
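The explicit evaluation comes down to completing the square in the exponent. Here is a worked version of that step, with f(t) = e^(−t²/2)/√(2π) and the substitution s = t + x/2:

$$
\begin{aligned}
2\int_{-\infty}^{\infty} f(t)\, f(t+x)\,dt
  &= \frac{1}{\pi}\int_{-\infty}^{\infty} e^{-\left[t^2 + (t+x)^2\right]/2}\,dt
   = \frac{1}{\pi}\int_{-\infty}^{\infty} e^{-\left(t + \frac{x}{2}\right)^2 - \frac{x^2}{4}}\,dt \\
  &= \frac{e^{-x^2/4}}{\pi}\int_{-\infty}^{\infty} e^{-s^2}\,ds
   = \frac{e^{-x^2/4}}{\pi}\,\sqrt{\pi}
   = \frac{1}{\sqrt{\pi}}\, e^{-x^2/4}
\end{aligned}
$$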

This confirms what we already knew, namely, that the density distribution of the difference between two samples from a standard normal distribution is just a scaled version of the standard normal density, i.e.,

$$
g(x) = \sqrt{2}\, f\!\left(\frac{x}{\sqrt{2}}\right), \qquad x \ge 0
$$
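Substituting the standard normal density f(t) = e^(−t²/2)/√(2π) shows the scaling directly. Integrating the scaled density over (x, ∞) with the substitution v = s/√2 then gives the tail probability in terms of F:

$$
\sqrt{2}\, f\!\left(\frac{x}{\sqrt{2}}\right) = \frac{\sqrt{2}}{\sqrt{2\pi}}\, e^{-x^2/4} = \frac{1}{\sqrt{\pi}}\, e^{-x^2/4},
\qquad
\int_x^{\infty} \sqrt{2}\, f\!\left(\frac{s}{\sqrt{2}}\right) ds = 2\int_{x/\sqrt{2}}^{\infty} f(v)\,dv = 2\left[1 - F\!\left(\frac{x}{\sqrt{2}}\right)\right]
$$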

It follows that the probability that the absolute difference between two random samples t1 and t2 from a standard normal distribution will exceed x is exactly

$$
P\{|t_1 - t_2| > x\} = 2\left[1 - F\!\left(\frac{x}{\sqrt{2}}\right)\right]
$$

Tables of the normal density integral F (or sometimes 1 − F) are given in many statistics books, so this formula is convenient for evaluating the probability of differences of various magnitudes.
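Without a printed table, the same lookup can be done with `statistics.NormalDist().cdf` from the Python standard library. This is a sketch with a hypothetical function name. The optional `sigma` parameter is an added convenience, not part of the text: for a normal population with standard deviation σ, the difference has standard deviation σ√2, so x is simply divided by σ.

```python
from math import sqrt
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(0.0, 1.0)

def prob_abs_diff_exceeds(x, sigma=1.0):
    """P(|t1 - t2| > x) = 2 [1 - F(x / (sigma * sqrt(2)))] for two independent
    samples from a normal population with standard deviation sigma."""
    z = x / (sigma * sqrt(2))
    return 2.0 * (1.0 - STANDARD_NORMAL.cdf(z))

# A small table for the standard normal case (sigma = 1).
print("  x    P(|t1 - t2| > x)")
for x in (0.5, 1.0, 1.5, 2.0, 2.5, 3.0):
    print(f"{x:4.1f}   {prob_abs_diff_exceeds(x):.4f}")
```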

Notice that this is a special case of the more general problem of finding the probability density function for the range of n samples, i.e., the difference between the maximum and minimum values of n samples drawn from a population with density f(x) and distribution F(x). For general n it's more convenient to express the generalization of (2) in terms of the probability that the range of n samples will be less than x, which is given by the integral

$$
P\{\text{range} < x\} = n\int_{-\infty}^{\infty} f(t)\left[F(t+x) - F(t)\right]^{n-1} dt
$$
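As a consistency check, setting n = 2 in the range integral n∫ f(t)[F(t + x) − F(t)]^(n−1) dt should give 1 minus the two-sample exceedance probability. The check uses two facts: ∫ f(t)F(t) dt = [F(t)²/2] evaluated from −∞ to ∞, which equals 1/2, and ∫ f(t) dt = 1:

$$
\begin{aligned}
2\int_{-\infty}^{\infty} f(t)\left[F(t+x) - F(t)\right] dt
  &= 2\int_{-\infty}^{\infty} f(t)\,F(t+x)\,dt - 2\int_{-\infty}^{\infty} f(t)\,F(t)\,dt \\
  &= 2\int_{-\infty}^{\infty} f(t)\,F(t+x)\,dt - 1 \\
  &= 1 - 2\int_{-\infty}^{\infty} f(t)\left[1 - F(t+x)\right] dt
\end{aligned}
$$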

However, for n greater than 2, this integral cannot be evaluated in closed form (as far as I know), nor expressed simply in terms of the standard normal functions, so it must be evaluated numerically.
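Here is a minimal sketch of that numerical evaluation for a standard normal population. The function names, the trapezoidal rule, the truncation to [−10, 10], and the panel count are all illustrative assumptions. A seeded Monte Carlo simulation of the sample range (maximum minus minimum) is included only as a cross-check.

```python
import random
from math import erf, exp, pi, sqrt

def f(t):
    """Standard normal density."""
    return exp(-t * t / 2) / sqrt(2 * pi)

def F(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2)))

def range_cdf(x, n, lim=10.0, panels=4000):
    """P(range of n independent standard normal samples < x), computed as
    n * integral of f(t) [F(t + x) - F(t)]^(n - 1) dt with the trapezoidal
    rule on [-lim, lim]."""
    if x <= 0:
        return 0.0
    h = 2 * lim / panels

    def integrand(t):
        # f(t): the minimum sits at t; the other n - 1 samples fall in (t, t + x).
        return f(t) * (F(t + x) - F(t)) ** (n - 1)

    total = 0.5 * (integrand(-lim) + integrand(lim))
    for i in range(1, panels):
        total += integrand(-lim + i * h)
    return n * total * h

def range_cdf_mc(x, n, trials=100_000, seed=2):
    """Monte Carlo estimate of the same probability, for cross-checking."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if max(sample) - min(sample) < x:
            hits += 1
    return hits / trials

for n in (2, 3, 5):
    print(n, range_cdf(2.0, n), range_cdf_mc(2.0, n))
```

For n = 2 the quadrature should reproduce 1 − 2[1 − F(x/√2)]. For larger n, the simulation column should agree to roughly two decimal places.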
